Mobile

Google enhances Maps, Search, Assistant, Translate

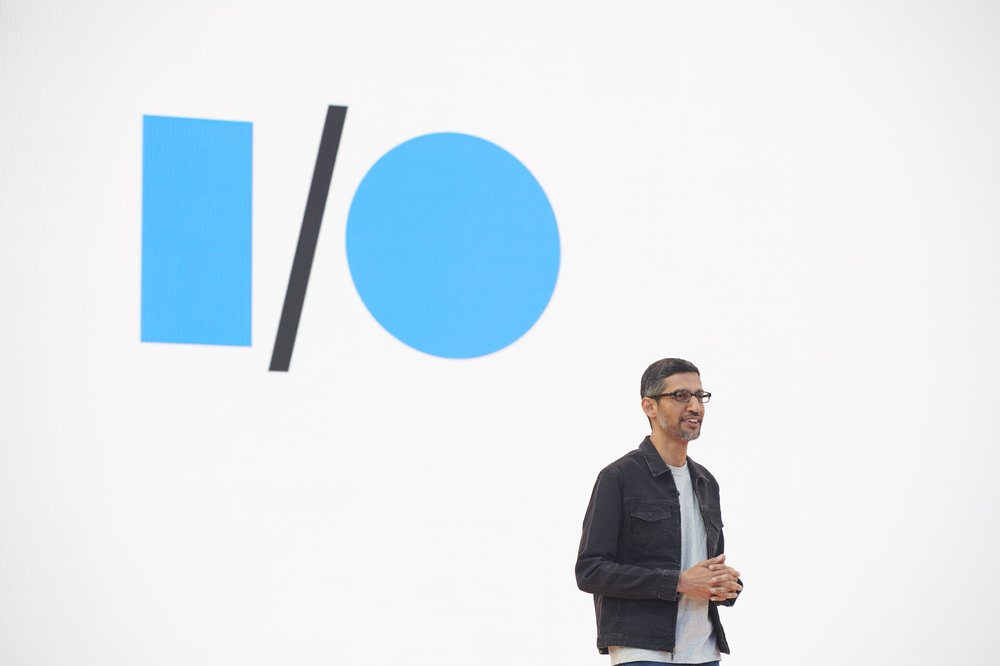

At Google I/O last night, the company announced several app ecosystem enhancements that South Africans can look forward to, writes BRYAN TURNER.

At the Google I/O event held at the Shoreline Amphitheatre last night, the company unveiled its latest Pixel devices and a host of enhancements for its software catalogue, including Maps, Search, Assistant, and Translate.

South Africans won’t be seeing the Pixel locally, except for users who like to jump through parallel import hoops. That said, the devices are impressive. The Pixel 6a, the latest budget device, stole the show. It houses the latest Tensor AI chip, developed by Google. It enables features like stronger image signal processing (for better photos) and the latest version of Google’s Magic Eraser photo editing tool, which enables users to remove unwanted subjects from photos. In the US market, it will cost $449.

The Pixel 7 and 7 Pro were also announced, and house the next-generation Tensor chips. Pricing and availability weren’t revealed, so we’ll have to wait for more information later this year.

The Pixel Watch was finally “revealed” after months of leaks and even being “forgotten” in a restaurant (we would also love to know who takes off their wearable and forgets it in a restaurant). The smartwatch features a nearly bezel-less display, which is complemented by a digital crown. The biggest announcement about the wearable is its tight integration with Fitbit, which was recently acquired by Google.

Moving along to the announcements most relevant to South Africans: Google apps and machine learning enhancements.

To start with, the Google app itself will now allow users to search in more ways. Multisearch allows users to take a photo or screenshot of an item of clothing, for example a yellow dress, and find dresses in online shops that resemble its style. Google goes a step further by letting the user change the colour, to see dresses in that style but in a colour they prefer.

Multisearch is also getting “near me” functionality, so if a user spots a yummy-looking dish on Instagram, they can take a screenshot of it, search for it on Google, and find restaurants near them that serve that dish. These features are launching in the Google app later this year.

AR elements are being added to allow users to explore their surroundings and search what they see, live. In its example, Google showed an aisle in a store that had several types of chocolate. Google’s image recognition can match those products to a database of online reviews and show the highest-rated ones overlaid on the products in augmented reality (AR). Users can also apply filters for attributes, like nut-free chocolate.

Maps got a huge overhaul with new features. Immersive View gives users a flyover view of major city centres, and even lets them virtually walk into popular restaurants and attractions (much as one virtually walks through the streets in Google Street View).

The company is also opening its ARCore Geospatial API, which enables Immersive and Live View technologies to be used in third-party apps. This can help end-users find parking with a mall’s app, navigate large airports, and play location-based games, all with the help of AR.

At the Google I/O press event, Gadget asked Miriam Daniel, VP of Google Maps, how these features will function on older devices.

“The feature is powered by Google Cloud’s immersive streaming technology,” said Daniel. “Any device that is capable of rendering HD images or views should be capable of offering immersive view. A lot of that heavy processing is done on the cloud and streamed instantly to the device.”

Google Assistant’s voice recognition is set to get better at following natural conversation. It will now be able to understand the ums and ahs in user speech, as well as when words are drawn out while one is speaking. In Google’s example, the assistant understood “Hey Google, play. The new song frommmmmmmmmmm…”, gave the user time to think, then the user said “Florence and the… something?”, and Assistant used machine learning to work out that the user had given most of the context for “Florence and the Machine”.

Google Translate made a breakthrough with a new “zero resource translation” approach, which means Google’s AI can read text in a single language and learn the nuances of that language without ever seeing human translations. There will be support for 24 additional languages, spoken by over 300 million people. These include Sepedi and Xitsonga.

“It may sound magical and we’re amazed at it too,” said Isaac Caswell, research scientist at Google Translate. “We have one giant model that’s trained in all the hundred languages that have translated text and over a thousand that don’t have translated text. You can think of this model as a polyglot who is fluent in hundred languages, and he can read a stack of novels in a language he’s not seen before. You could imagine if he had the knowledge of knowing 100 languages, he could just piece together what’s going on in the language he’s never seen before.”

All these app and software updates will be rolled out over the course of 2022.