Robotics

CES 2025: Robots rule the revolution

At the world’s biggest consumer tech show, one can barely move without bumping into a robot, writes ARTHUR GOLDSTUCK.

A revolution is under way in gadgetry, but it would be easy to misread the trend if one merely scans the surface of the world’s biggest consumer electronics expo.

At CES, the Las Vegas event where it seems as if half the world’s technology companies launch their new products at the beginning of every year, one can barely move without bumping into a robot, or at least being invited to interact with one. That may sound a little like the last 10 years of CES, but this year is different. The robots are a little less cute, and a lot more intelligent.

That is not the real revolution, however. A massive shift has occurred in the software and the rules built into the robots. Thanks to generative AI, it is now a simple matter to make robots more interactive and seemingly intelligent. While AI has been touted at CES for at least a decade, this is the first time that any developer, innovator or manufacturer can bake it into products without specific expertise in AI. The new skill that is revolutionising consumer electronics is the ability to understand just what AI can do for a product, what consumers would ideally want it to do, and how the interface should look and feel.

The result is that the likes of AI coffee machines and sleep aids are no longer novelties, but an expected norm. It also means that many tech companies, flush with the delight of joining the AI revolution, exaggerate the significance of their accomplishment. A smart lawnmower, for example, is presented not merely as a cool new product, but as marking “a new era of smart life”. As a category, such devices have been with us for at least five years. However, AI means that the segment will expand rapidly, along with the hype.

Robots are an entirely different matter. Robots that can listen and talk back are like, so 2014 – the year Pepper the robot receptionist/waitron was launched in Japan. Robots that perform highly specific tasks, in the tradition of industrial robots that work production lines, are the 2025 norm.

For example, RoboSense, an AI-driven robotics company, hosted an online launch event titled “Hello Robot” to introduce not a robot, but “a universal development platform” built on a “humanoid robotic prototype” that can be used for advancements in vision, tactile sensing, mobility, and manipulation.

In one category alone, LiDAR (Light Detection and Ranging), RoboSense unveiled three solutions at CES that it claims are world firsts:

- The EM4, the world’s first “thousand-beam” ultra-long-range automotive-grade digital LiDAR. Featuring 1080 beams and a detection range of up to 600 meters, EM4 provides vehicles with 1080P high-definition 3D perception, “pushing the boundaries of advanced intelligent driving and fully autonomous capabilities”.

- The E1R, claimed to be the world’s first fully solid-state digital LiDAR for robotics. Also designed with automotive-grade reliability, it features RoboSense’s proprietary SPAD-SoC and 2D VCSEL chips, with a compact aperture and 120° × 90° ultra-wide field of view. The company says it excels in obstacle avoidance, mapping, and navigation, and supports “industrial, commercial, and embodied intelligent robots across diverse operational environments”.

- The Airy is the world’s first 192-line hemispherical digital LiDAR, delivering a 360° × 90° ultra-wide field of view “in a compact design comparable to a ping-pong ball”. It boasts a detection range of 120 meters, 1.72 million points per second, and ±1cm precision, “making it ideal for robots requiring omnidirectional perception in complex scenarios”.

Other RoboSense products or “solutions” on display at CES include the Active Camera, an intelligent ecosystem comprising sensor hardware, computational cores, and AI algorithms that merge LiDAR signals and camera data for mapping and obstacle avoidance; and the second-generation dexterous hand Papert 2.0 with 20 degrees of freedom, incorporating hand-eye coordination.

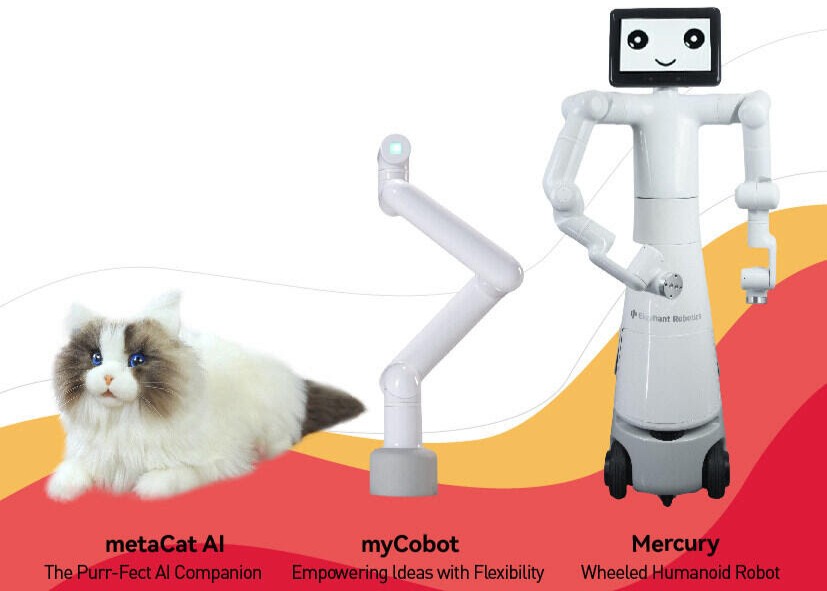

Elephant Robotics arrived at CES from Shenzhen, China, with a slightly more, shall we say, clunky message: “Enjoy Robots World”. Like RoboSense, it displayed a wide range of robotic products, from educational collaborative robots to bionic companion robotic pets. Its real value lies in the versatility of its products, intended for “creative projects, daily tasks, and production applications”. A variety of end “effectors” – including cameras, suction pumps, grippers, and dexterous hands – enhances the practicality of robotic arms and increases efficiency.

The stars of the Elephant show were two “commercial collaborative robots”:

- The myCobot Pro 630, a high-performance commercial robot equipped with a holographic device to provide an immersive and dynamic experience for commercial displays and exhibitions, enabling multi-angle movement and 3D visual effects.

- The Mercury X1, a universal wheeled humanoid robot featuring a mobile chassis with high-performance LiDAR and dual robotic arms, can work seamlessly alongside humans to improve efficiency and expand commercial opportunities in sectors such as service, hospitality, education, scientific research, entertainment and smart homes.

Despite this hard-nosed approach to hardware, Elephant does succumb to the cult of cuteness. It is using CES to show off a new series of bionic robotic pets, with names that speak for themselves: metaCat AI, metaDog AI and metaPanda AI. The “AI bionic robotic pets” mimic the appearance, texture, sound, and experience of real animals. Not only are they “fulfilling people’s need for companionship and emotional comfort”, says Elephant, but they are equipped with AI models to “understand human language and emotions, providing a life-like interaction experience”.

The company is marketing the devices as being particularly beneficial for children, seniors, and individuals with autism or Alzheimer’s disease. They “help reduce feelings of loneliness, alleviate anxiety, and promote mental well-being”, and “their AI capabilities allow them to engage in dynamic, lifelike conversations, and even respond to emotional cues, fostering deeper connections with users”.

Do they really “represent a new frontier in human-robot interaction, where robotics not only enhance productivity but also improve emotional quality of life”? Perhaps that will be a more realistic promise when we come back to CES in 2026.

* Arthur Goldstuck is CEO of World Wide Worx and editor-in-chief of Gadget.co.za. Follow him on Bluesky on @art2gee.bsky.social.
