AWS Summit: GenAI sees for the unsighted

Among innovative demos in the GenAI Zone, one stood out for its advancements in accessibility, writes JASON BANNIER.

A new generative artificial intelligence (GenAI) model is expected to enhance the autonomy and safety of visually impaired individuals.

Demonstrated at the AWS Summit in Johannesburg last week, the technology is built on AWS cloud services and is designed to provide real-time image descriptions without internet access. It offers a significant step toward a more accessible future. The demonstration’s timing, aligning with the opening week of the 2024 Paralympic Games, highlighted its potential to enhance independence and wellbeing.

GenAI, AWS cloud, and helping the visually impaired

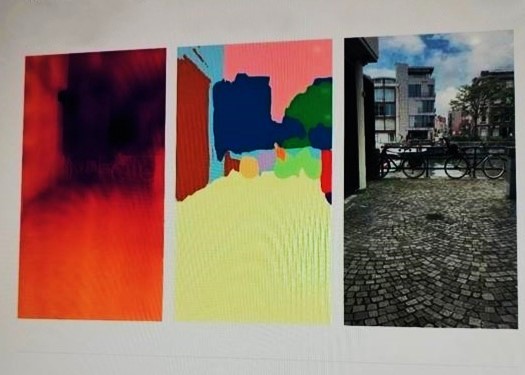

In the GenAI Zone of the AWS Summit expo floor, at an exhibit titled “Helping the Visually Impaired with GenAI”, AWS senior healthcare data scientist Marcelo Cunha told Gadget: “Imagine if you could use similar technologies used by self-driving cars to help the visually impaired. This demo shows how one can navigate around an environment leveraging this technology.”

The system, which is currently being developed, processes video footage to detect objects and generates a description, which is then conveyed through text-to-speech.

For example, says Cunha, if someone who is visually impaired must navigate within an unfamiliar city, the model can warn them by saying: “Be careful, there is a sharp object 1 metre away.”

In another example, the model provided the text: “The cobblestone pavement in the foreground could pose a tripping hazard at around 0.9 metres away. Please be cautious while navigating this area.”

Photo: JASON BANNIER

The model faces several challenges in achieving real-time operation, including the need to function without internet access, minimise bias, and reduce lag. To address these, it is being developed to run locally by integrating multiple models.

“Another challenge to running in real time is balancing precision and speed,” says Cunha. “There is a huge trade-off to make the models accurate and fast.”

The model leverages AWS Cloud and several AWS services: a Computer Vision Foundation model in SageMaker predicts distances and detects objects using a monocular camera, while Large Language Models in Amazon Bedrock, which can be deployed at the edge, provide real-time scene descriptions. These descriptions are then converted to audio narrations using Amazon Polly.
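The flow described above, from detected objects and distances to a spoken warning, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the AWS team's implementation: the `Detection` structure, the `describe_scene` function and the 2-metre warning threshold are all hypothetical, and a fixed template stands in for the language model that would generate the description.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object with an estimated distance (hypothetical structure)."""
    label: str
    distance_m: float


def describe_scene(detections, warn_within_m=2.0):
    """Turn detections into a short warning suitable for text-to-speech.

    A template stands in for the language-model step; the threshold
    is an assumed value chosen only for illustration.
    """
    # Keep only objects close enough to matter, nearest first.
    nearby = sorted(
        (d for d in detections if d.distance_m <= warn_within_m),
        key=lambda d: d.distance_m,
    )
    if not nearby:
        return "The path ahead looks clear."
    closest = nearby[0]
    unit = "metre" if closest.distance_m == 1 else "metres"
    return (
        f"Be careful, there is a {closest.label} "
        f"{closest.distance_m:g} {unit} away."
    )


if __name__ == "__main__":
    print(describe_scene([Detection("sharp object", 1.0)]))
```

In a full pipeline, the returned string would be handed to a text-to-speech service. With Amazon Polly, that is typically done through the `synthesize_speech` call in the AWS SDK, which is left out here so the sketch runs without AWS credentials.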

Racing meets GenAI

DeepRacer, a fully autonomous 1/18th scale race car driven by reinforcement learning, also made an appearance in the GenAI Zone. First revealed in 2018 at AWS re:Invent in Las Vegas, DeepRacer is both a demonstration and a teaching tool for machine learning (ML).

A competition at AWS Summit provided an engaging and immersive way for people to learn about ML and AI. The car is designed to be a gamified, fun and accessible way for developers, engineers, and ML enthusiasts to get hands-on experience with reinforcement learning.

The goal is to develop highly capable reinforcement learning models that can navigate complex racing environments with speed and precision, showcasing the power of AI for autonomous control.

Along the way, participants and onlookers gained insights into the challenges and complexities of deploying AI systems in the real world, where factors like sensor noise, environmental variations and vehicle dynamics must be considered.
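For readers curious about what participants actually write, DeepRacer models are shaped by a reward function: a short Python function that scores each step the car takes. The sketch below rewards staying near the centre line. The input keys (`all_wheels_on_track`, `distance_from_center`, `track_width`) follow the parameter dictionary documented for DeepRacer, while the thresholds and reward values are illustrative assumptions, not settings used at the Summit.

```python
def reward_function(params):
    """Score one step: higher reward the closer the car is to the centre line."""
    # Leaving the track earns almost nothing.
    if not params["all_wheels_on_track"]:
        return 1e-3

    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    # Three bands around the centre line (illustrative thresholds).
    if distance_from_center <= 0.1 * track_width:
        return 1.0
    if distance_from_center <= 0.25 * track_width:
        return 0.5
    if distance_from_center <= 0.5 * track_width:
        return 0.1
    return 1e-3  # Likely about to leave the track.
```

During training, the simulator calls this function at every step and the learning algorithm adjusts the driving policy to collect more reward over a lap.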

GenAI Zone highlights

AI Karaoke: In this demo, users complete a prompt with a microphone and choose the best response. Powered by Amazon Bedrock, Amazon Transcribe and Amazon ECS Anywhere, it is designed to introduce users to several key ideas in GenAI system engineering, including text-to-image generation and visual Q&A, the importance of creating a data flywheel from human feedback, and mitigating the challenges of hallucination and safety.

PartyRock: This playground offers a creative space for users to build AI-generated applications, powered by Amazon Bedrock. The platform allows users to experiment with GenAI and provides them with an educational experience. Through hands-on engagement, users can explore the possibilities of GenAI, learn about model training, and understand the real-world applications and implications of AI-generated content.

2D Robot Designer: Users create personalised avatars by uploading images and generating customised designs based on text prompts. Using GenAI models, this tool offers a hands-on experience where users can see how AI transforms input data into distinct, creative outputs. It is an engaging way to learn about the capabilities of AI in image processing and customisation, offering insights into how ML models can be fine-tuned to generate personalised visual content.

* Visit the AWS website here.

* Jason Bannier is a data analyst at World Wide Worx and writer for Gadget.co.za. Follow him on Twitter and Threads at @jas2bann.