Product of the Day

Red Hat updates enterprise AI platform

Red Hat AI 3 aims to support production-ready AI through distributed inference and a foundation for next-generation intelligent agents.

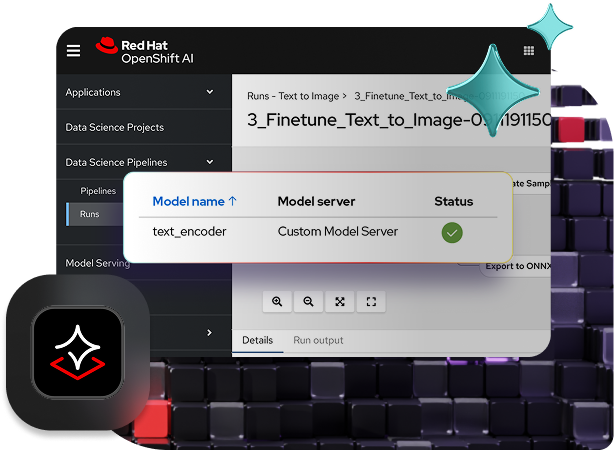

Red Hat, a hybrid cloud technology company, has released Red Hat AI 3, an update to its enterprise AI platform. The new version integrates Red Hat AI Inference Server, Red Hat Enterprise Linux AI (RHEL AI), and Red Hat OpenShift AI to streamline large-scale AI inference.

The platform is designed to help organisations transition workloads from proof-of-concept to production and enhance collaboration on AI-enabled applications.

“As enterprises scale AI from experimentation to production, they face a new wave of complexity, cost and control challenges,” says Joe Fernandes, VP and GM of the AI Business Unit at Red Hat. “With Red Hat AI 3, we are providing an enterprise-grade, open source platform that minimises these hurdles.

“By bringing new capabilities like distributed inference with llm-d and a foundation for agentic AI, we are enabling IT teams to more confidently operationalise next-generation AI, on their own terms, across any infrastructure.”

Approximately 95% of organisations fail to see measurable financial returns from around $40 billion in enterprise AI spending, according to The GenAI Divide: State of AI in Business report from the Massachusetts Institute of Technology NANDA project.

Red Hat AI 3 is designed to address these challenges by providing a unified platform for managing AI workloads across hybrid, multi-vendor environments. It enables organisations to scale and distribute workloads efficiently while improving collaboration on advanced AI applications, including agent-based systems. Built on open standards, the platform supports a range of models and hardware accelerators across datacentres, public cloud, and edge environments.

Enterprise AI inference

As organisations move AI initiatives into production, Red Hat says the emphasis shifts from training and tuning models to inference, the “doing” phase of enterprise AI. According to the company, Red Hat AI 3 emphasises scalable and cost-effective inference by building on the vLLM and llm-d community projects and Red Hat’s model optimisation capabilities to deliver production-grade serving of large language models (LLMs).

Red Hat has announced that llm-d, a framework designed to enhance how large language models operate within Kubernetes environments, is generally available in OpenShift AI 3.0. The framework supports intelligent distributed inference by combining Kubernetes orchestration and vLLM performance with open-source components such as the Kubernetes Gateway API Inference Extension, NVIDIA’s NIXL low-latency data transfer library, and the DeepEP Mixture of Experts communication library.

Red Hat says this allows organisations to:

- Lower costs and improve response times with intelligent inference-aware model scheduling and disaggregated serving.

- Deliver operational simplicity and maximum reliability with prescriptive “Well-lit Paths” that streamline the deployment of models at scale on Kubernetes.

- Maximise flexibility with cross-platform support to deploy LLM inference across different hardware accelerators, including NVIDIA and AMD.

llm-d extends vLLM from a single-node, high-performance inference engine into a distributed, scalable serving system that integrates closely with Kubernetes. It is designed to deliver predictable performance, support measurable ROI, and improve infrastructure planning. These enhancements aim to address the demands of managing variable LLM workloads and deploying large models such as Mixture-of-Experts (MoE) architectures.
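To make the idea of inference-aware scheduling more concrete, the toy router below sends each request to the replica that can reuse the most already-processed prompt text while carrying the shortest queue. It is a minimal sketch for illustration only, not llm-d's actual algorithm: the `Replica` class, the character-level prefix matching, and the `queue_penalty` weight are all assumptions made up for this example.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Replica:
    """A hypothetical model server replica as seen by the router."""
    name: str
    queue_depth: int = 0                                     # requests assigned so far
    cached_prompts: list[str] = field(default_factory=list)  # prompts already processed


def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading characters that two prompts have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


def score(replica: Replica, prompt: str, queue_penalty: int) -> int:
    """Reward reusable prefix text, penalise each request already queued."""
    reuse = max(
        (shared_prefix_len(prompt, cached) for cached in replica.cached_prompts),
        default=0,
    )
    return reuse - queue_penalty * replica.queue_depth


def route(replicas: list[Replica], prompt: str, queue_penalty: int = 20) -> str:
    """Pick the best-scoring replica, record the assignment, return its name."""
    chosen = max(replicas, key=lambda r: score(r, prompt, queue_penalty))
    chosen.queue_depth += 1
    chosen.cached_prompts.append(prompt)
    return chosen.name


SYSTEM = "You are a support assistant for Example Corp. "
replicas = [Replica("a"), Replica("b")]

first = route(replicas, SYSTEM + "How do I reset my password?")
second = route(replicas, SYSTEM + "How do I cancel my order?")
third = route(replicas, "Translate 'hello' into French.")
print(first, second, third)  # prints: a a b
```

The second request follows the first to replica `a` because the two share a long system prompt, while the unrelated third request goes to the idle replica `b`. A production scheduler would work on token blocks and live server metrics rather than raw characters and a simple counter.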

Unified platform

Red Hat AI 3 provides a unified and adaptable platform designed to support the development of production-ready generative AI solutions. It aims to enable collaboration and streamline workflows across teams by offering a single environment for platform and AI engineers to implement their AI strategies.

Red Hat says the new capabilities, aimed at improving productivity and efficiency when scaling from proof of concept to production, include:

- Model as a Service (MaaS) capabilities build on distributed inference and enable IT teams to act as their own MaaS providers, serving common models centrally and delivering on-demand access for both AI developers and AI applications. This allows for better cost management and supports use cases that cannot run on public AI services due to privacy or data concerns.

- AI hub empowers platform engineers to explore, deploy and manage foundational AI assets. It provides a central hub with a curated catalogue of models, including validated and optimised gen AI models, a registry to manage the lifecycle of models and a deployment environment to configure and monitor all AI assets running on OpenShift AI.

- Gen AI studio provides a hands-on environment for AI engineers to interact with models and rapidly prototype new gen AI applications. With the AI assets endpoint feature, engineers can easily discover and consume available models and MCP servers, which are designed to streamline how models interact with external tools. The built-in playground provides an interactive, stateless environment to experiment with models, test prompts and tune parameters for use cases like chat and retrieval-augmented generation (RAG).

- New Red Hat validated and optimised models are included to simplify development. The curated selection includes popular open source models such as OpenAI’s gpt-oss and DeepSeek-R1, as well as specialised models such as Whisper for speech-to-text and Voxtral Mini for voice-enabled agents.

Next-generation AI agents

AI agents are expected to significantly influence application development, with their autonomous and complex workflows creating increased demand for scalable inference systems. The Red Hat OpenShift AI 3.0 release expands support for agentic AI through enhanced inference capabilities and new features focused on agent management.

Aiming to simplify the creation and deployment of AI agents, Red Hat has added a unified API layer built on the Llama Stack framework, aligning development with industry standards such as OpenAI-compatible LLM interface protocols. The company has also adopted the Model Context Protocol (MCP), an emerging standard designed to improve interoperability by standardising how AI models interact with external tools.
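For readers unfamiliar with what an OpenAI-compatible interface looks like in practice, the sketch below builds a chat completions request of the kind such servers accept. The base URL and model name are placeholder assumptions, not values published by Red Hat, and the request is only constructed here, not sent.

```python
import json
import urllib.request

# Hypothetical values: substitute the endpoint and model name of your own deployment.
BASE_URL = "http://localhost:8000/v1"
MODEL = "example-model"


def build_chat_request(
    prompt: str, base_url: str = BASE_URL, model: str = MODEL
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Summarise our returns policy in two sentences.")
print(request.get_method(), request.full_url)
print(json.loads(request.data)["messages"])

# To send it against a live server, something like the following should work:
#   with urllib.request.urlopen(request) as response:
#       reply = json.load(response)["choices"][0]["message"]["content"]
```

Because the request shape is shared across compatible servers, client code written this way should need only a different base URL and model name to target another backend.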

Red Hat AI 3 includes a modular and extensible toolkit for model customisation, developed from InstructLab functionality. The toolkit provides specialised Python libraries that enable flexibility and control, supported by open-source components such as Docling for data processing, which converts unstructured documents into AI-readable formats. It offers a framework for synthetic data generation, an LLM fine-tuning hub, and an integrated evaluation hub for monitoring and validating model performance. This aims to help engineers achieve more accurate and contextually relevant outcomes using proprietary data.
